Welcome everyone to The Main Thread, dissecting the mechanics of distributed systems. This issue is a part of a tetralogy (another 😀 ) where we will dive deep into Caching.

Learning about hidden patterns in caches is important because the speed-consistency trade-offs trip even pro engineers. In this issue, we will arm ourselves with principles, code, and strategies - no fluff.

A cache is a bargain: speed in exchange for potential lies. Master it, or debug forever.

Let’s jump in.

Core Concept: Speed’s Dark Side

A cache is fast storage (usually memory) that sits between our app and a slower backing store (a database or an API).

When the application needs to read some data, it checks the cache first. If the data is there (a cache hit), it is returned immediately, which is very fast. If it is not there (a cache miss), the data has to be fetched from the slow backing store.

The goal of a cache is therefore to cut latency and to take load off the backing store for hot data. The downside is that the cached copy can drift from the source of truth, so we risk reading stale data.
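To put a number on the latency benefit, here is a small sketch of average read latency as a function of hit ratio. The function name and the 1 ms / 50 ms figures are hypothetical, chosen only for illustration, and the model assumes a miss pays for both the cache lookup and the backing-store read.

```python
def effective_latency_ms(hit_ratio: float, cache_ms: float, store_ms: float) -> float:
    """Average read latency for a given cache hit ratio.

    Every read pays the cache lookup; only misses also pay the store read.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    miss_ratio = 1.0 - hit_ratio
    return cache_ms + miss_ratio * store_ms


# Hypothetical numbers: 1 ms cache lookup, 50 ms database read.
for ratio in (0.0, 0.5, 0.9, 0.99):
    latency = effective_latency_ms(ratio, cache_ms=1.0, store_ms=50.0)
    print(f"hit ratio {ratio:.2f}: {latency:.1f} ms average read latency")
```

Under these assumptions, most of the gain comes from pushing the hit ratio up on hot keys, which is why the patterns below mostly differ in how they keep those keys populated and fresh.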

Caching Patterns

Broadly, there are four common caching patterns:

1. Cache-Aside

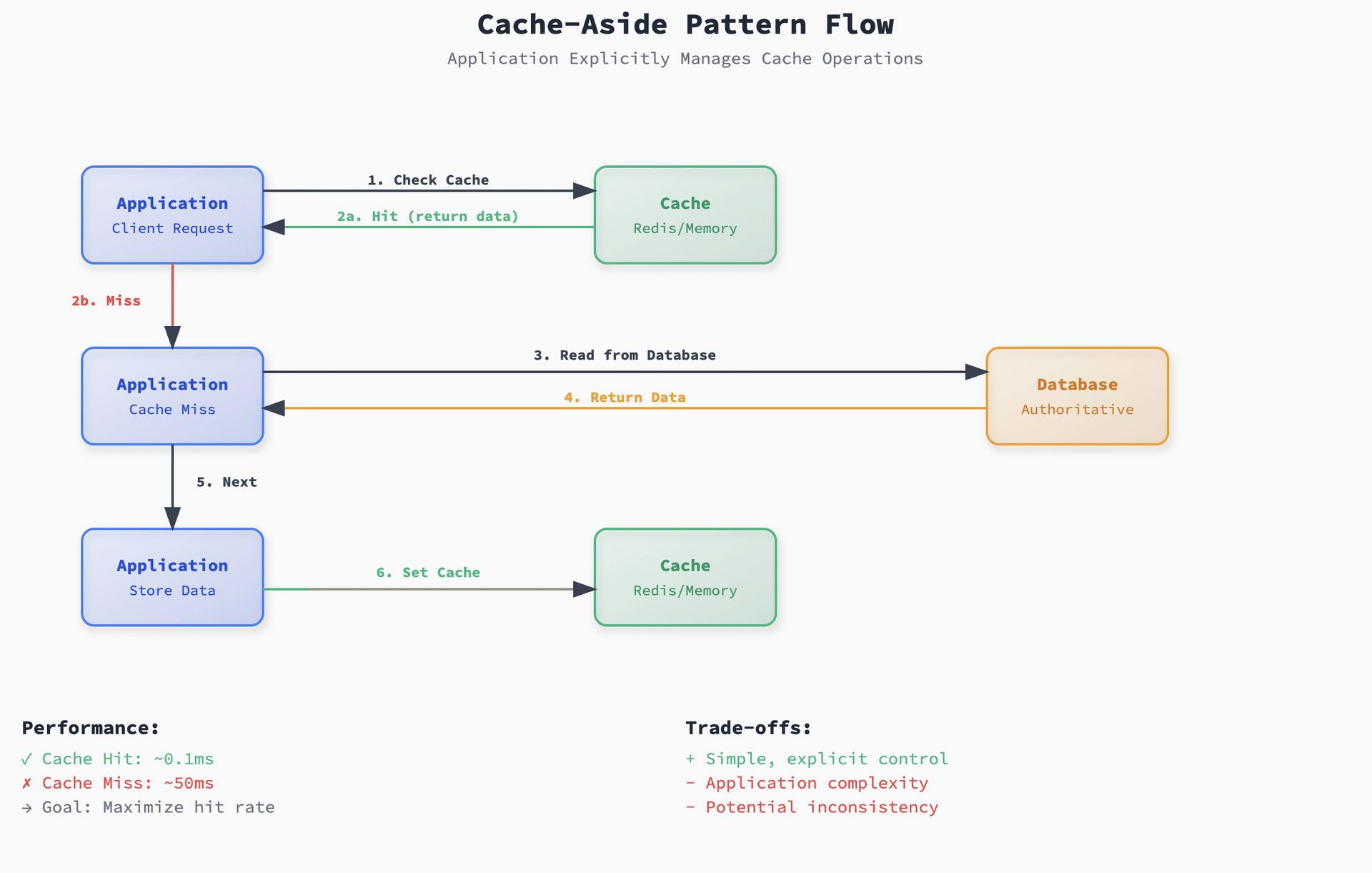

The most common pattern: our application explicitly manages the cache.

Cache Aside

Pros: Simple, explicit control over what gets cached.

Cons: Application handles cache logic, potential for inconsistency.
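The inconsistency risk shows up mostly on the write path: if the application updates the database but leaves the old cache entry in place, readers keep getting the old value until the TTL expires. One common mitigation is to delete the cached key after every write. The sketch below assumes plain dicts as stand-ins for the cache and the database; `update_user` is a hypothetical helper, not part of any library.

```python
cache = {}                        # stand-in for a real cache client
database = {42: {"name": "Ada"}}  # stand-in for the backing store


def get_user(user_id):
    key = f"user:{user_id}"
    user = cache.get(key)
    if user is not None:
        return user                # cache hit
    user = database.get(user_id)   # cache miss: read the source of truth
    if user is not None:
        cache[key] = user
    return user


def update_user(user_id, fields):
    # Write to the database first, then drop the cached copy so the
    # next read repopulates the cache with the new value.
    database[user_id] = {**database.get(user_id, {}), **fields}
    cache.pop(f"user:{user_id}", None)


print(get_user(42))                # first read populates the cache
update_user(42, {"name": "Grace"})
print(get_user(42))                # re-read from the database after invalidation
```

Deleting rather than overwriting the cache entry is a design choice: it keeps the write path simple and lets the read path stay the only place that populates the cache. It narrows the stale window but does not remove every race between concurrent readers and writers.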

    # `cache` and `database` are placeholder clients for illustration.
    def get_user(user_id):
        # 1. Check cache first
        user = cache.get(f"user:{user_id}")
        if user is not None:
            return user  # Cache hit

        # 2. Cache miss - read from database
        user = database.get_user(user_id)

        # 3. Populate cache for next time
        cache.set(f"user:{user_id}", user, ttl=300)  # 5 min TTL
        return user

2. Read-Through

The cache automatically handles data loading on cache misses.

Read Through pattern

Pros: Simplifies application code, ensures cache always has fresh data on first access.

Cons: Adds latency on cache misses, cache layer needs database access.
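The "cache layer needs database access" point can be made concrete by injecting a loader function into the cache. This is a minimal in-memory sketch under that assumption; the `loader` parameter, the `loads` counter, and the `profiles` dict are illustrative names, not an API from a specific caching library.

```python
import time
from typing import Any, Callable, Optional


class ReadThroughCache:
    """In-memory read-through cache: misses are filled by the injected loader."""

    def __init__(self, loader: Callable[[str], Optional[Any]], ttl: float = 300.0):
        self._loader = loader   # how the cache reaches the backing store
        self._ttl = ttl
        self._entries = {}      # key -> (value, expires_at)
        self.loads = 0          # number of backing-store reads, for inspection

    def get(self, key: str) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is not None and time.monotonic() < entry[1]:
            return entry[0]     # fresh hit
        # Miss or expired entry: the caller waits for the backing store here.
        self.loads += 1
        value = self._loader(key)
        if value is not None:
            self._entries[key] = (value, time.monotonic() + self._ttl)
        return value


profiles = {"profile:7": {"bio": "hello"}}   # hypothetical database table
read_through_cache = ReadThroughCache(loader=profiles.get)

read_through_cache.get("profile:7")   # miss: loads from `profiles`
read_through_cache.get("profile:7")   # hit: served from memory
print(read_through_cache.loads)
```

Missing keys are deliberately not cached in this sketch, so repeated lookups for a key that does not exist will hit the backing store every time.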

    def get_user_profile(user_id):
        # Cache handles DB lookup automatically
        return read_through_cache.get(f"profile:{user_id}")

    class ReadThroughCache:
        def get(self, key):
            value = self._cache.get(key)
            if value is None:
                # Cache automatically loads from database
                value = self._load_from_database(key)
                self._cache.set(key, value, ttl=300)
            return value

3. Write Through

Cache layer synchronously updates both cache and database.

Write Through pattern

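Pros: Strong read-after-write consistency as long as every write goes through the cache layer, cache always has fresh data, no separate cache invalidation needed.

Cons: Higher write latency (each write waits for both the cache and the database), and a failure in either step fails the whole write.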
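The failure case is worth spelling out, because there is no real transaction spanning the cache and the database. A rough sketch, assuming a dict-like cache and a hypothetical `FlakyDatabase` class that can simulate an outage: if the database write raises, the cache is never touched; if the cache update raises afterwards, the entry is dropped on a best-effort basis so readers fall back to the database.

```python
class FlakyDatabase:
    """Hypothetical backing store that can simulate one failed write."""

    def __init__(self):
        self.rows = {}
        self.fail_next = False

    def write(self, key, value):
        if self.fail_next:
            self.fail_next = False
            raise ConnectionError("simulated database outage")
        self.rows[key] = value


class WriteThroughCache:
    def __init__(self, database, cache):
        self._database = database   # any object with write(key, value)
        self._cache = cache         # dict-like cache

    def set(self, key, value):
        # Database first: if this raises, the cache is left untouched.
        self._database.write(key, value)
        try:
            self._cache[key] = value
        except Exception:
            # The database has the new value but the cache does not:
            # best-effort removal of the stale entry, then report failure.
            self._cache.pop(key, None)
            raise

    def get(self, key):
        return self._cache.get(key)


db = FlakyDatabase()
write_through_cache = WriteThroughCache(db, cache={})
write_through_cache.set("profile:1", {"plan": "free"})

db.fail_next = True
try:
    write_through_cache.set("profile:1", {"plan": "pro"})
except ConnectionError:
    print("write rejected; cache still holds", write_through_cache.get("profile:1"))
```

Writing to the database before the cache means a failed write can leave the cache behind the database, but never ahead of it.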

    def update_user_profile(user_id, profile_data):
        # Both cache and database updated together
        write_through_cache.set(f"profile:{user_id}", profile_data)

    class WriteThroughCache:
        def set(self, key, value):
            # Write to database first (ensures durability)
            self._database.write(key, value)

            # Then update cache (ensures fresh reads)
            self._cache.set(key, value)

            # Both operations must succeed or the write is reported as failed.
            # Note: this is not an atomic transaction; if the cache update
            # raises after the database write, the cached entry is stale
            # until it is invalidated or expires.

4. Write Behind

The cache accepts writes immediately; database updates happen asynchronously.

Write Behind

Pros: Low write latency, high write throughput, batch optimizations possible.

Cons: Risk of data loss, eventual consistency, complex failure handling.
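The data-loss risk is easiest to see with a deterministic version in which flushing is an explicit call rather than a background thread. This sketch assumes a dict as the database and is single-threaded; `flush` and `pending_count` are illustrative names. Anything still pending when the process dies is lost.

```python
from collections import OrderedDict


class WriteBehindCache:
    """Single-threaded write-behind sketch with an explicit flush step."""

    def __init__(self, database: dict):
        self._database = database
        self._cache = {}
        self._pending = OrderedDict()   # key -> latest value not yet persisted

    def set(self, key, value):
        self._cache[key] = value        # readers see the new value right away
        self._pending[key] = value      # repeated writes to one key coalesce

    def get(self, key):
        return self._cache.get(key)

    def pending_count(self) -> int:
        return len(self._pending)

    def flush(self, batch_size: int = 100) -> int:
        """Persist up to batch_size pending writes; return how many were written."""
        written = 0
        while self._pending and written < batch_size:
            key, value = self._pending.popitem(last=False)   # oldest first
            try:
                self._database[key] = value
            except Exception:
                # Put the write back so a later flush can retry it.
                self._pending.setdefault(key, value)
                raise
            written += 1
        return written


db = {}
write_behind_cache = WriteBehindCache(db)
write_behind_cache.set("profile:1", {"v": 1})
write_behind_cache.set("profile:1", {"v": 2})   # overwrites the pending write
write_behind_cache.set("profile:2", {"v": 9})

print(db)                                        # still empty: nothing persisted yet
print(write_behind_cache.flush(), "writes persisted")
```

Coalescing pending writes per key is one of the batch optimizations mentioned above: three `set` calls become two database writes here. A production version would also need locking and a retry policy, which this sketch leaves out.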

    def update_user_profile(user_id, profile_data):
        # Fast cache update, database writes queued
        write_behind_cache.set(f"profile:{user_id}", profile_data)

    class WriteBehindCache:
        def set(self, key, value):
            # Update cache immediately (fast response)
            self._cache.set(key, value)

            # Queue database write for later (async)
            self._write_queue.enqueue(key, value)
            # Risk: data loss if cache crashes before flush

        def _background_flush(self):
            # Periodic or event-driven database updates
            while True:
                batch = self._write_queue.get_batch(size=100)
                for key, value in batch:
                    try:
                        self._database.write(key, value)
                    except Exception as e:
                        self._handle_write_failure(key, value, e)

Pattern Guide

| Pattern | Managed by | Write latency | Consistency | Best for |
|---|---|---|---|---|
| Cache-Aside | Application | Normal | Eventual | General-purpose, flexible |
| Read-Through | Cache (reads) | Normal | Eventual, bounded by TTL | Read-heavy workloads |
| Write-Through | Cache (synchronous writes) | Higher | Strong | Freshness-critical data |
| Write-Behind | Cache (asynchronous writes) | Lower | Eventual | Write speed over durability |

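Choose by read/write ratio and by how much stale data the feature can tolerate. Pre-warm the cache with known hot keys before taking traffic to avoid a burst of cold-start misses.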
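Pre-warming can be as simple as loading a list of known hot keys at startup. A minimal sketch, assuming a dict-like cache and a loader callable; `warm_cache`, `hot_keys`, and the sample data are hypothetical names for illustration.

```python
def warm_cache(cache: dict, loader, hot_keys):
    """Load hot keys into the cache before serving traffic.

    Returns the keys that were actually loaded.
    """
    warmed = []
    for key in hot_keys:
        if key in cache:
            continue                # already cached, nothing to do
        value = loader(key)
        if value is not None:       # skip keys the backing store does not have
            cache[key] = value
            warmed.append(key)
    return warmed


# Hypothetical backing store and hot-key list.
store = {"user:1": {"name": "Ada"}, "user:2": {"name": "Grace"}}
startup_cache = {}
loaded = warm_cache(startup_cache, store.get, ["user:1", "user:2", "user:404"])
print(f"warmed {len(loaded)} keys")
```

Where the hot-key list comes from (access logs, a previous cache snapshot, a fixed configuration) depends on the system, and this sketch takes it as given.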

Conclusion

We have now seen the how of caching - the common patterns and their trade-offs. But the real design question is how much inconsistency our feature can tolerate. In the next issue we’ll measure that - we’ll introduce staleness ratio, sampling strategies, and the “inconsistency budget” (think: SLO for cache freshness).