A hearty welcome to the second edition of The Main Thread newsletter from the dynamic city of Bangalore. This week I finally started reading Robert Greene’s “The 48 Laws of Power” and I am finding it an interesting read.

On to the matter at hand: B-Trees.

The last issue argued the core idea: “B-Trees are bandwidth optimizers.” This issue assumes you have already bought that premise and skips the basics (if you haven’t read it yet, I encourage you to check it out; I am sure you’ll love it).

In this issue, we focus on the small engineering choices (node size, fill factor, split policy, and buffer pool) and why they make or break that bandwidth win in production.

Node size vs. page size: the core alignment

Node: one B-Tree page’s worth of keys plus child pointers

Page: the unit the disk or SSD actually reads and writes

B-Trees work because one disk read brings in many keys to compare against. That only happens when a node roughly matches a disk page (4KB, 8KB, 16KB). But this alignment is a pragmatic balance, not dogma.

Why? Because node size sits at the intersection of two scarce resources:

Disk bandwidth: larger nodes → fewer levels → fewer I/Os.

CPU cache & CPU work: larger nodes → more keys to scan inside the node → more cache misses and CPU cycles.
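To make this trade-off concrete, here is a back-of-the-envelope sketch. It assumes 16-byte keys, 8-byte child pointers, a hypothetical 64-byte page header, and completely full nodes, then estimates how many page reads a lookup over one billion keys needs versus how many binary-search comparisons each node costs. Real engines differ (variable-length keys, prefix compression, slot arrays), so treat the numbers as illustrative only.

```python
import math

# Assumed sizes in bytes; real engines vary (variable-length keys, compression).
KEY_SIZE, PTR_SIZE, HEADER = 16, 8, 64

def fanout(page_size: int) -> int:
    """Key + child-pointer entries that fit in one node."""
    return (page_size - HEADER) // (KEY_SIZE + PTR_SIZE)

def height(n_keys: int, f: int) -> int:
    """Levels needed so that f ** levels >= n_keys, assuming every node is full."""
    levels, reach = 1, f
    while reach < n_keys:
        levels += 1
        reach *= f
    return levels

def lookup_cost(n_keys: int, page_size: int) -> tuple[int, int]:
    """(page reads per lookup, binary-search comparisons per node)."""
    f = fanout(page_size)
    return height(n_keys, f), math.ceil(math.log2(f))

for page in (4096, 8192, 16384):
    reads, cmps = lookup_cost(1_000_000_000, page)
    print(f"{page:>6} B page: fanout={fanout(page)}, "
          f"reads/lookup={reads}, comparisons/node={cmps}")
```

Under these assumptions, going from 4KB to 16KB pages removes one level (5 reads down to 4) while each node’s binary search needs two more comparisons (8 up to 10).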

Mental Model

When to pick smaller pages: when the working set must fit aggressively in L1/L2 caches, or when unpredictably large keys would push node traversal out of cache. Smaller nodes reduce in-node search cost at the expense of more disk reads.

When to pick larger pages: when sequential scans and range queries dominate, because each leaf read returns more consecutive keys and fewer levels mean fewer I/Os. The caveat: larger pages increase memory pressure, and a binary search within a huge node costs more CPU and more cache misses.
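One rough way to see the cache-miss side is to count how many distinct 64-byte cache lines a single binary search touches inside one node. This sketch assumes 16-byte keys packed contiguously from a cache-line-aligned offset and follows one search path (a target larger than every key). Real nodes often use slot arrays or compressed keys, which changes the exact counts; the function name is hypothetical.

```python
def cache_lines_touched(n_keys: int, key_size: int = 16, line: int = 64) -> int:
    """Distinct cache lines one binary search probes inside a node.

    Assumes keys are packed contiguously from a cache-line-aligned offset and
    the target is larger than every key, so the search always moves right.
    """
    lo, hi = 0, n_keys                      # current search window [lo, hi)
    lines = set()
    while lo < hi:
        mid = (lo + hi) // 2
        lines.add(mid * key_size // line)   # index of the line holding keys[mid]
        lo = mid + 1                        # target > keys[mid]: go right
    return len(lines)

for n in (168, 338, 680):
    print(n, "keys ->", cache_lines_touched(n), "cache lines")
```

On this path, a 680-key node touches 8 distinct lines versus 6 for a 168-key node; each line is a potential cache miss when the node is not already hot.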

Rule of thumb

Pick a page/node size that keeps internal nodes comfortably in CPU caches while letting leaf pages be page-aligned to disk. If we care about low tail latency, we should bias slightly toward smaller node sizes to reduce worst-case in-node work.

Fill factor: leave slack space deliberately

When a B-Tree page (node) runs out of room for a new key, the engine divides that page into two pages and pushes a separator key up into the parent.
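A minimal sketch of a B+-tree-style leaf split, assuming integer keys kept in a sorted Python list and a fixed per-page key capacity (real pages are budgeted in bytes, not key counts). The function name `insert_into_leaf` is hypothetical.

```python
import bisect
from typing import Optional

def insert_into_leaf(keys: list[int], key: int, capacity: int
                     ) -> tuple[list[int], Optional[list[int]], Optional[int]]:
    """Insert key into a sorted leaf and split the leaf if it overflows.

    Returns (left, right, separator) on a split, or (keys, None, None) otherwise.
    The separator is the first key of the right page; the caller would insert it
    into the parent, which may overflow and split in turn.
    """
    bisect.insort(keys, key)           # keep the page sorted
    if len(keys) <= capacity:
        return keys, None, None        # still fits: no split
    mid = len(keys) // 2
    left, right = keys[:mid], keys[mid:]
    return left, right, right[0]       # separator goes up to the parent
```

Inserting 25 into `[10, 20, 30, 40]` with a capacity of 4 yields `[10, 20]`, `[25, 30, 40]`, and separator 25. If the parent is also full, absorbing that separator splits the parent too, which is the cascade that fill factor tries to avoid.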

Fill factor = how full the engine packs a page (for example, when building an index), deliberately leaving the rest as slack for future inserts.

Why care? A split is not just one extra write: it can cascade up the tree and cause multiple page writes plus metadata updates. A small reserved buffer often prevents these cascades and makes tail latency more predictable.

Fill factor is the single simplest, highest-leverage knob for smoothing write behaviour.

High fill (tight packing): fewer pages, lower read amplification, better density. But inserts cause splits more often, so writes become burstier and can cascade into parent writes.

Lower fill (reserved space): fewer splits, more stable latency for writes, but larger index size and a small read penalty.
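A toy simulation makes this trade-off visible. It is a hypothetical model, not how any particular engine behaves: leaves only (no parent cascades), a fixed capacity of 100 keys per leaf, uniformly random integer keys, and 50/50 splits. It bulk-loads 100,000 keys at a given fill factor, then inserts 20,000 more and counts leaf splits.

```python
import bisect
import random

def simulate(fill: float, capacity: int = 100, n_loaded: int = 100_000,
             n_inserts: int = 20_000, seed: int = 42) -> tuple[int, int]:
    """Return (leaf pages after bulk load, leaf splits during the insert phase)."""
    rng = random.Random(seed)
    loaded = sorted(rng.sample(range(10 * n_loaded), n_loaded))
    per_leaf = max(1, round(capacity * fill))          # keys per page at load time
    leaves = [loaded[i:i + per_leaf] for i in range(0, n_loaded, per_leaf)]
    initial_pages = len(leaves)
    lows = [leaf[0] for leaf in leaves]                # lowest key per leaf, for routing
    splits = 0
    for _ in range(n_inserts):
        key = rng.randrange(10 * n_loaded)
        i = max(0, bisect.bisect_right(lows, key) - 1)  # leaf whose range covers key
        leaf = leaves[i]
        bisect.insort(leaf, key)
        if len(leaf) > capacity:                        # overflow: split page in half
            mid = len(leaf) // 2
            leaves[i:i + 1] = [leaf[:mid], leaf[mid:]]
            lows[i:i + 1] = [leaf[0], leaf[mid]]
            splits += 1
    return initial_pages, splits

for fill in (1.0, 0.85, 0.7):
    pages, splits = simulate(fill)
    print(f"fill={fill:.2f}: pages after load={pages}, splits during inserts={splits}")
```

Loading at 70% starts with more pages (1,429 versus 1,000) but sees far fewer splits than loading at 100%, where nearly the first insert into each leaf forces a split.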

Postgres’ per-index fillfactor exists for this reason. Choose it by workload:

Read-dominant, mostly cold data (OLAP): high fill factor for better density.

Write-heavy or hot ranges (OLTP): lower fill factor (70–85%) to reduce split storms.

Split strategy: reactive vs proactive

There are two common philosophies for handling page overflow:

Reactive: split only when a page overflows. This approach has the best space efficiency but risks sharp, synchronous stalls.

Proactive: pre-split or rebalance before a page overflows. This approach gives smoother latency, but you pay in wasted space and extra background work.

Most systems default to reactive for space reasons, but the smartest deployments use a hybrid: reactive by default; proactive rebalancing for hot pages during quiet windows or when monitoring shows unstable latencies.

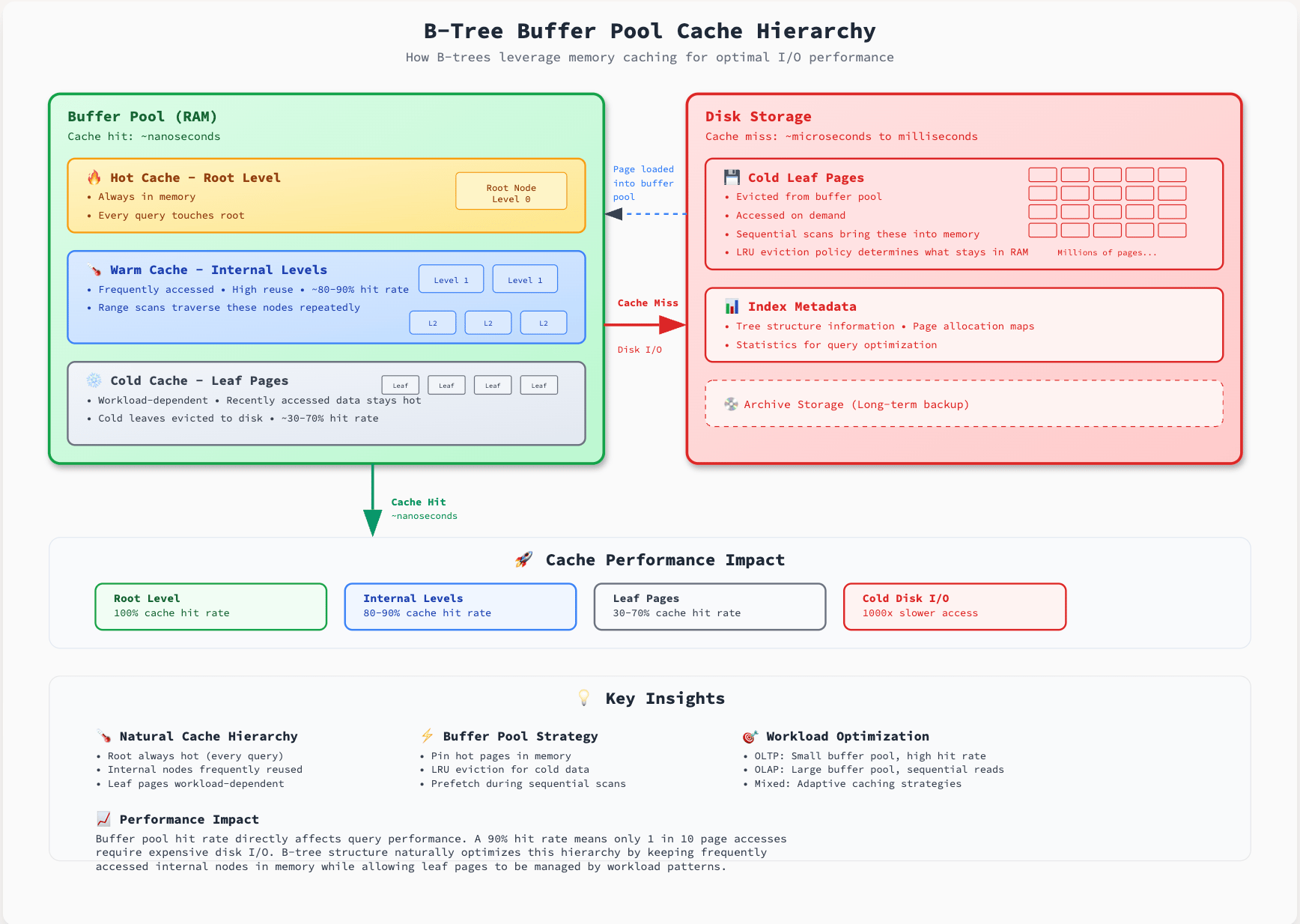

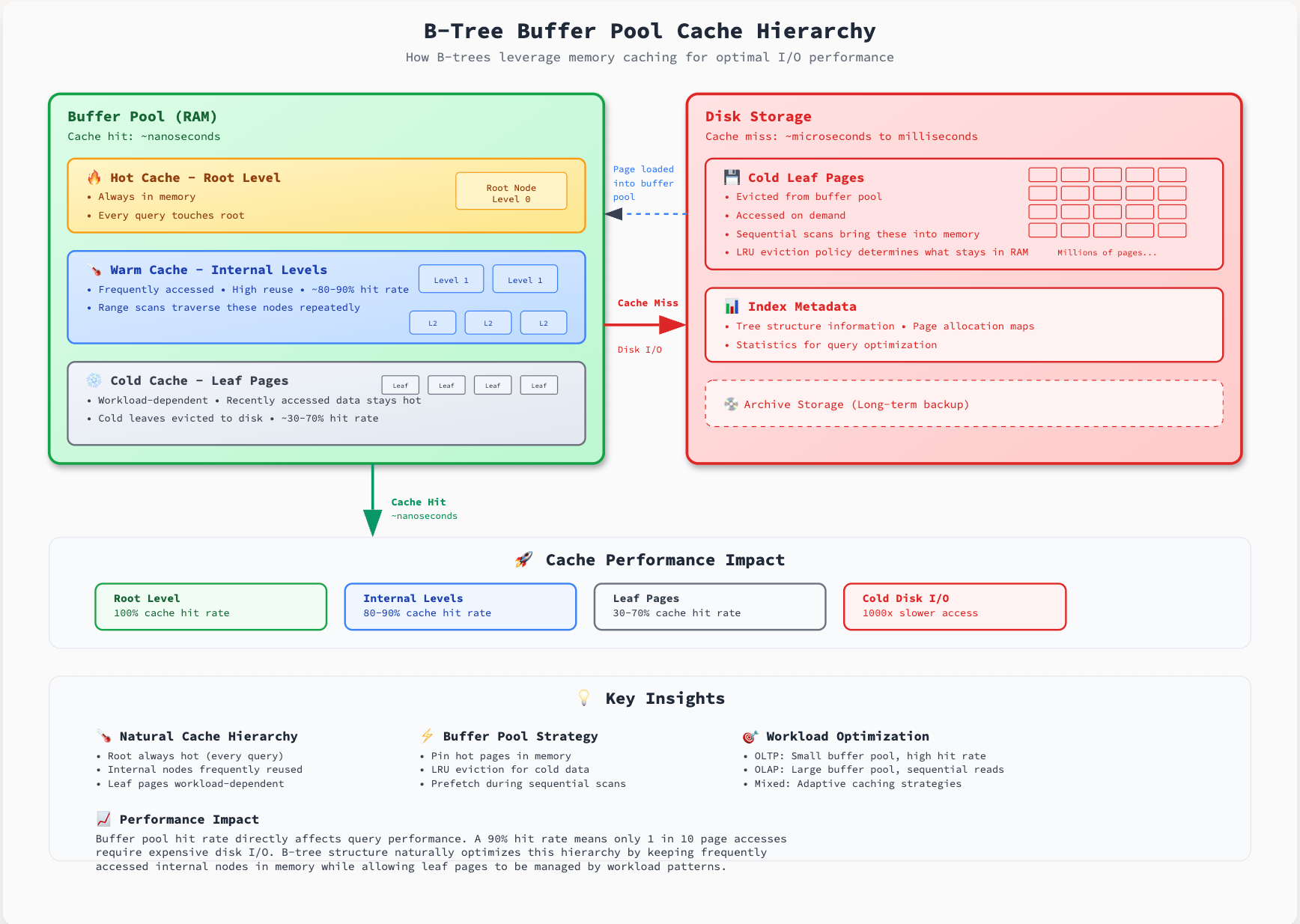
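Here is a minimal sketch of the hybrid policy, assuming leaves are sorted Python lists with a fixed key capacity and that monitoring hands us a set of hot leaf indexes. The function names, the `hot` set, and the 0.9 threshold are hypothetical, chosen only for illustration.

```python
import bisect

def split_in_half(leaf: list[int]) -> list[list[int]]:
    mid = len(leaf) // 2
    return [leaf[:mid], leaf[mid:]]

def insert_reactive(leaves: list[list[int]], i: int, key: int, capacity: int) -> None:
    """Foreground path: split leaf i only if this insert overflows it."""
    bisect.insort(leaves[i], key)
    if len(leaves[i]) > capacity:
        # Synchronous split: its cost lands on this insert's latency.
        leaves[i:i + 1] = split_in_half(leaves[i])

def presplit_hot(leaves: list[list[int]], hot: set[int], capacity: int,
                 threshold: float = 0.9) -> list[list[int]]:
    """Background path for a quiet window: split hot leaves that are nearly full.

    Returns a new leaf list; cold leaves and roomy hot leaves are left alone.
    """
    out: list[list[int]] = []
    for i, leaf in enumerate(leaves):
        if i in hot and len(leaf) >= threshold * capacity:
            out.extend(split_in_half(leaf))   # pay the split now, off the hot path
        else:
            out.append(leaf)
    return out
```

The design choice is that the foreground path stays purely reactive (best density), while `presplit_hot` spends space only on pages that monitoring has flagged as hot.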

Buffer pool: memory amplifies (or undoes) your choices

Buffer Pool: In-memory cache of pages.

The buffer pool is the multiplier on your B-Tree design. If internal levels stay resident in memory, almost every lookup touches only the leaves on disk. If they don’t, even an ideal node layout will produce more I/O.

A buffer pool is leverage. It changes the effective cost model: internal nodes stay hot; leaves are where the actual battle happens.

Pin the root: keep the top nodes hot, because nearly every lookup passes through them.

Size the pool to keep internal levels resident: this is one of the biggest levers for reducing lookup latency.

Workload locality wins: hot ranges (recent inserts, active users) should stay in cache to avoid repeated leaf I/Os.

B-Tree Buffer Pool Cache Hierarchy
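A toy LRU buffer pool helps illustrate why pinning matters. This is a sketch under simplifying assumptions: here “pin” simply means “never evict” (real engines usually track pin counts for pages in active use), and `_read_from_disk` is a hypothetical stand-in for real I/O.

```python
from collections import OrderedDict

class BufferPool:
    """Toy LRU buffer pool where pinned pages (e.g. the root) are never evicted."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.pages = OrderedDict()   # page_id -> contents, oldest first (LRU order)
        self.pinned = set()
        self.hits = 0
        self.misses = 0

    def pin(self, page_id: int) -> None:
        self.pinned.add(page_id)

    def get(self, page_id: int) -> bytes:
        if page_id in self.pages:
            self.hits += 1
            self.pages.move_to_end(page_id)   # mark as most recently used
            return self.pages[page_id]
        self.misses += 1
        data = self._read_from_disk(page_id)
        self._evict_if_full()
        self.pages[page_id] = data
        return data

    def _evict_if_full(self) -> None:
        if len(self.pages) < self.capacity:
            return
        for victim in self.pages:             # least recently used first
            if victim not in self.pinned:
                del self.pages[victim]        # safe: we return right away
                return
        raise RuntimeError("every cached page is pinned")

    @staticmethod
    def _read_from_disk(page_id: int) -> bytes:
        return f"page-{page_id}".encode()     # stand-in for a real disk read
```

With a 3-page pool, pinning page 0 (the root) and then scanning pages 1 through 9 churns through leaves, yet the root stays resident and the next root access is a hit.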

Practical implication

If you can afford the memory, reserve enough to hold the tree’s upper levels plus hot leaf ranges in the buffer pool. If you can’t, focus on:

making internal nodes small

reducing churn via lower fill factor or write buffers

using workload shaping to avoid thrashing
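To estimate how much memory “the tree’s upper levels” actually means, here is a small sizing sketch. It assumes full pages, a fixed number of keys per leaf, and a fixed internal fanout; the function names and the example parameters are hypothetical.

```python
import math

def level_page_counts(n_keys: int, keys_per_leaf: int, fanout: int) -> list[int]:
    """Pages per level, from the leaves up to the root (full pages assumed)."""
    counts = [math.ceil(n_keys / keys_per_leaf)]
    while counts[-1] > 1:
        counts.append(math.ceil(counts[-1] / fanout))
    return counts

def internal_bytes(n_keys: int, keys_per_leaf: int, fanout: int, page_size: int) -> int:
    """Memory needed to keep every non-leaf level resident in the buffer pool."""
    return sum(level_page_counts(n_keys, keys_per_leaf, fanout)[1:]) * page_size

counts = level_page_counts(100_000_000, keys_per_leaf=200, fanout=300)
print(counts)
print(internal_bytes(100_000_000, 200, 300, 8192) / 2**20, "MiB of internal pages")
```

With these assumed numbers, 100 million keys need 500,000 leaf pages but only 1,674 internal pages, roughly 13 MiB at 8KB per page, which is why keeping internal levels resident is usually affordable.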

Metric to track: cache hit rate by level (root, internal, leaves). When the internal hit rate drops, even well-tuned node/page choices will produce extra I/O.
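A minimal sketch of tracking that metric, assuming the lookup path knows each page’s depth (level 0 is the root, level `height - 1` is the leaves). The class and method names are hypothetical; you would call `record` wherever the buffer pool resolves a page.

```python
from collections import Counter

class LevelHitStats:
    """Count buffer pool hits and misses separately for root, internal, and leaf pages."""

    def __init__(self, height: int):
        self.height = height        # levels in the tree; level 0 is the root
        self.hits = Counter()
        self.misses = Counter()

    def level_name(self, level: int) -> str:
        if level == 0:
            return "root"
        return "leaf" if level == self.height - 1 else "internal"

    def record(self, level: int, hit: bool) -> None:
        (self.hits if hit else self.misses)[self.level_name(level)] += 1

    def hit_rate(self, name: str) -> float:
        total = self.hits[name] + self.misses[name]
        return self.hits[name] / total if total else 0.0
```

Alerting on `hit_rate("internal")` rather than one global hit rate catches the case where the upper levels start falling out of memory while leaf churn masks it in the aggregate.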

Short checklist (if you want to act now)

Instrument: page reads/sec, page splits/sec, buffer pool hit rate by level.

If write spikes cause latency: reduce fill factor for hot indexes; consider background rebalancing.

If reads dominate and memory is available: grow the buffer pool to keep internal levels resident.

If tail latency matters more than storage savings: accept lower packing for predictability.

Takeaway

B-Trees win by optimizing bandwidth, but keeping that win in production is a game of trade-offs: pick node sizes with CPU caches in mind, leave deliberate space where writes are hot, manage splits to avoid tail spikes, and use your buffer pool as the amplifier of whatever design you choose.

The next issue will discuss concurrency in real-world databases.

But if you can’t wait, here’s the detailed blog post on my website with diagrams, code, and mathematics.

If you liked this, subscribe to this newsletter for deeper, pragmatic breakdowns of database internals and systems design.