Hey curious engineers, welcome to the eighteenth issue of The Main Thread.

Here’s something that should bother you: if you write a prompt in Swahili, you pay roughly 1.8x more than if you write the same prompt in English.

You might think that's because Swahili is harder to process, but it isn't. Nor is it the result of some pricing policy.

The real reason is a technical decision made years ago - one that most AI engineers never think about.

The culprit is tokenization.

When we send text to an LLM, we are charged per token. Most foundation models are trained predominantly on English text.

This creates a problem.

English words are efficiently compressed. "The quick brown fox" → 4 tokens

But the non-English text? Not so much.

"Haraka haraka haina baraka" (Swahili proverb) → 9 tokens

"速い茶色のキツネ" (Japanese: "quick brown fox") → 8 tokens

"Le renard brun rapide" (French) → 5 tokens

As you can see, phrases with similar semantic content produce wildly different token counts. And since we pay per token, we pay more.

Why This Happens

Tokenizers learn to split text by finding common patterns in the training data. If “the” appears 50 million times in training, it gets its own token.

If “haraka” appears only 500 times, it gets split into subwords or, worse, individual bytes.
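To make this concrete, here is a minimal sketch of byte pair encoding (BPE), the family of algorithms GPT-style tokenizers are built on. It is a toy: it works on characters rather than bytes, the two-word "corpus" and its frequencies are hypothetical numbers borrowed from the example above, and the function names are made up for illustration. The point is only to show that merges go to the most frequent patterns first.

```python
from collections import Counter

def merge_pair(symbols, pair):
    """Replace each adjacent occurrence of `pair` with one merged symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)

def train_bpe(word_freqs, num_merges):
    """Learn up to `num_merges` merges from a {word: frequency} table."""
    # Start with every word split into single characters.
    corpus = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by how often the word occurs.
        pairs = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # the most frequent pair wins the merge
        merges.append(best)
        corpus = {merge_pair(s, best): f for s, f in corpus.items()}
    return merges

def tokenize(word, merges):
    """Apply the learned merges, in order, to a new word."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)

# Hypothetical frequencies echoing the example above.
word_freqs = {"the": 50_000_000, "haraka": 500}
merges = train_bpe(word_freqs, num_merges=2)

the_tokens = tokenize("the", merges)
haraka_tokens = tokenize("haraka", merges)
print(the_tokens, haraka_tokens)
```

With a budget of only two merges, both go to the pairs inside “the”, so it collapses into a single token while “haraka” stays split into six single-character pieces. Real vocabularies have tens of thousands of merges, but the budget is still finite and frequency still decides who gets it.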

The training data for GPT’s tokenizer was roughly:

90%+ English

~5% other European languages

<5% everything else combined

This means:

English words → usually 1 token

European languages → usually 1-2 tokens

African/Asian languages → usually 2-4+ tokens

The tokenizer simply doesn’t know these languages as well as it knows English.

The Real-World Impact

It’s not just about API costs. The real-world implications are deeper:

1. Context Window Inequality

The 128k context window sounds huge. But for a Hindi user, it’s effectively 64k. For a Thai user, maybe 50k. The same model offers different capabilities depending on the language.
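The arithmetic behind those figures is just a division. A small sketch, assuming token ratios of 2.0 for Hindi and 2.56 for Thai; these are back-solved from the 64k and 50k figures above, not measured values, and `effective_context` is a name made up for illustration.

```python
def effective_context(window_tokens, tokens_per_english_token):
    """English-equivalent capacity of a context window.

    `tokens_per_english_token` is how many tokens a language needs for
    content that takes one token in English.
    """
    return round(window_tokens / tokens_per_english_token)

# Ratios below are assumptions implied by the figures in the text.
hindi_capacity = effective_context(128_000, 2.0)
thai_capacity = effective_context(128_000, 2.56)
print(hindi_capacity, thai_capacity)
```

Plug in a ratio measured on your own text to see how much of the advertised window your users actually get.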

2. Degraded Reasoning For Non-English

More tokens mean more steps for the model to process and more chances for attention to dilute. Research suggests models perform worse on longer tokenizations of the same content.

3. Compounding Costs In Production

If we are building a product for the Asian market, our unit economics are fundamentally different from those of a competitor targeting the US. The API is the same, but the costs are not.
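Here is a rough sketch of how that difference compounds. Every number in it is hypothetical (request volume, tokens per request, price, and the 2.0 ratio), and `monthly_cost` is a made-up helper, so treat it as a template for your own figures rather than a forecast.

```python
def monthly_cost(requests, english_tokens_per_request, token_ratio,
                 price_per_million_tokens):
    """Estimated monthly API bill in dollars.

    `token_ratio` scales the English token count up to what the same
    content costs in the target language.
    """
    tokens = requests * english_tokens_per_request * token_ratio
    return tokens / 1_000_000 * price_per_million_tokens

# Hypothetical workload: 1M requests a month, 500 English-equivalent
# tokens each, at an assumed $10 per million tokens.
us_cost = monthly_cost(1_000_000, 500, 1.0, 10.0)
asia_cost = monthly_cost(1_000_000, 500, 2.0, 10.0)
print(f"US: ${us_cost:,.0f}  Asia: ${asia_cost:,.0f}")
```

Same product, same traffic, same price list, and the bill doubles purely because of the token ratio.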

Some Numbers

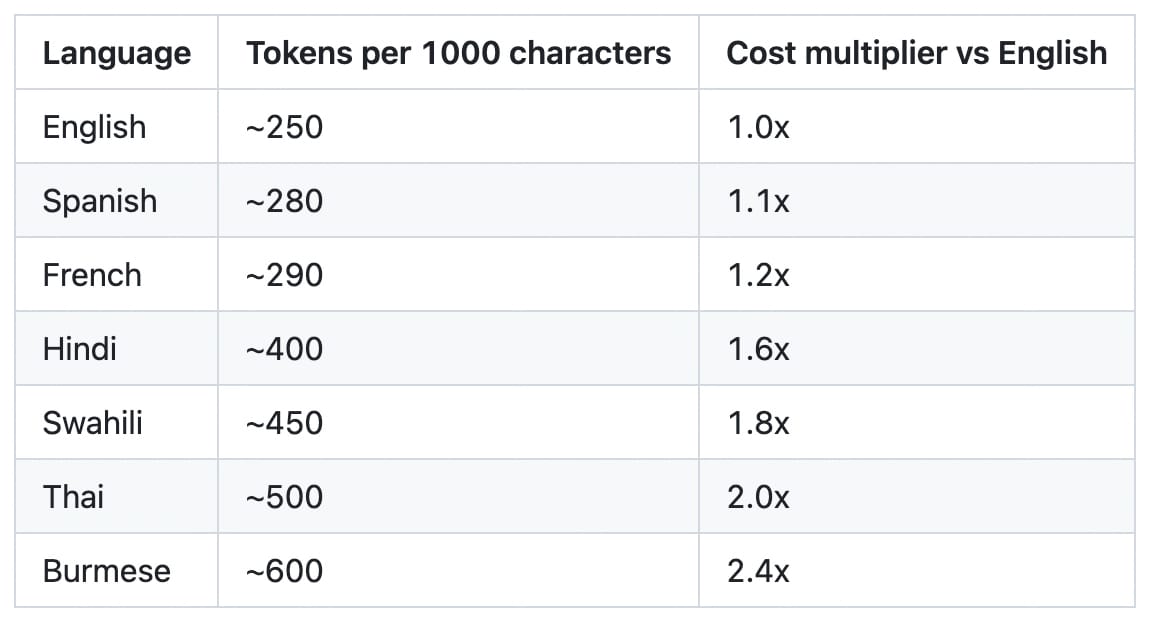

These are approximations based on typical text; actual ratios vary by content.

A Burmese speaker using GPT-4 pays more than double what an English speaker pays for equivalent work.

Why Hasn’t This Been Fixed?

It’s not that nobody knows this problem exists. OpenAI and Anthropic are aware. But fixing it is genuinely hard:

1. Retraining Is Expensive

The tokenizer is baked into the model. Changing it means retraining from scratch. This costs hundreds of millions (or even billions) of dollars in compute.

2. Backwards Compatibility

Existing fine-tuned models, embedding databases, and applications all depend on current tokenization. Changing it breaks everything.

3. The “Good Enough” Trap

For the primary market (English-speaking population), it works fine. The people most affected have the least market power.

What Can Be Done

If you are building products:

Measure your tokenization ratio: Know what your non-English users actually pay.

Consider multilingual embedding models: Models like mBERT/XLM-RoBERTa have more balanced tokenizers.

Preprocess strategically: Translation to English before API calls can actually be cheaper for some languages.
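The first and third points can be sketched in a few lines. This is a minimal sketch under stated assumptions: the helper names are hypothetical, `utf8_byte_counter` is only a stand-in that models the byte-fallback worst case (one token per UTF-8 byte), and the prices in the break-even check are made up. For real numbers, swap in `tiktoken_counter()`, which wraps the `tiktoken` library and needs `pip install tiktoken`.

```python
from typing import Callable

TokenCounter = Callable[[str], int]

def utf8_byte_counter(text: str) -> int:
    """Stand-in tokenizer: one token per UTF-8 byte (byte-fallback worst case)."""
    return len(text.encode("utf-8"))

def tiktoken_counter(encoding_name: str = "cl100k_base") -> TokenCounter:
    """Counter backed by a real tokenizer; requires the tiktoken package."""
    import tiktoken  # imported lazily so the rest runs without it
    enc = tiktoken.get_encoding(encoding_name)
    return lambda text: len(enc.encode(text))

def tokenization_ratio(count_tokens: TokenCounter,
                       native_text: str, english_text: str) -> float:
    """Tokens needed in the native language per token needed in English."""
    return count_tokens(native_text) / count_tokens(english_text)

def translation_is_cheaper(native_tokens: int, english_tokens: int,
                           price_per_token: float,
                           translation_cost: float) -> bool:
    """True if translating to English first beats sending the native text."""
    direct = native_tokens * price_per_token
    via_english = english_tokens * price_per_token + translation_cost
    return via_english < direct

# Worst-case ratio for the Japanese example from earlier in this issue.
ratio = tokenization_ratio(utf8_byte_counter, "速い茶色のキツネ", "quick brown fox")
print(f"byte-level ratio: {ratio:.2f}")

# Hypothetical break-even check: 900 native tokens vs 400 English tokens.
print(translation_is_cheaper(900, 400, 0.00001, 0.002))
```

The break-even check only looks at cost. Translation can also lose nuance, so it is worth testing output quality before routing production traffic through it.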

If you are choosing models:

Check the tokenizer, not just the model: Claude, GPT, and Llama each tokenize text differently.

Test with your actual language: Don’t assume benchmarks in English apply.

Budget for real cost: That “cheap” API isn’t cheap if you speak Thai.

If you are a model provider:

Publish tokenization statistics by language: Transparency enables informed choices.

Invest in multilingual tokenizers: The next generation of models should do better.

Consider per-character pricing: Radical, but would eliminate the disparity.

The Bigger Picture

This is a case study in how technical decisions have social consequences.

Nobody at OpenAI decided to charge Swahili speakers more. But the system they built was optimized for English because it was trained predominantly on English.

As AI becomes infrastructure - as important as electricity or the internet - these disparities matter. The 90% of the world that doesn’t speak English as a first language is being charged a tax they never agreed to.

And most of them don’t know it exists.

Do It Yourself

Go to OpenAI's tokenizer tool and paste the same sentence in different languages.

Watch the token count change. Then ask yourself: is this the foundation we want to build global AI infrastructure on?

If this issue made you think differently about AI fairness, share it with someone building for non-English markets.

— Anirudh